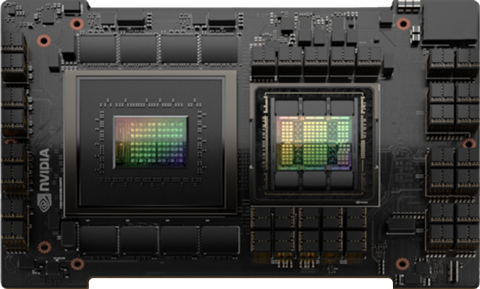

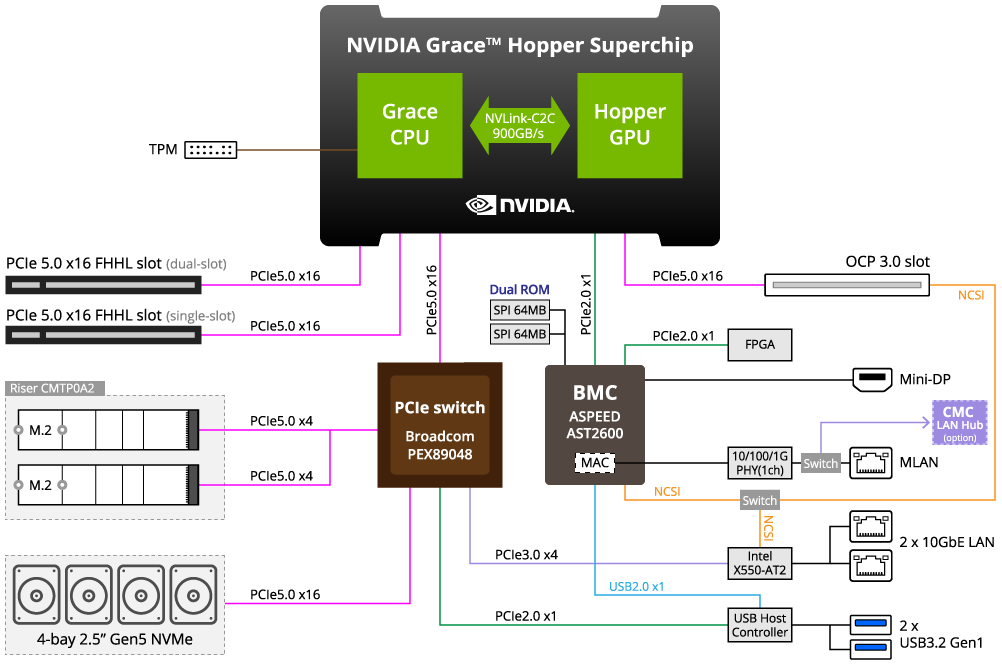

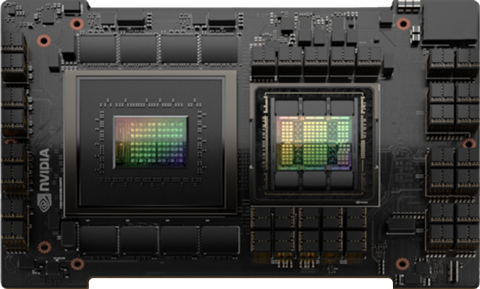

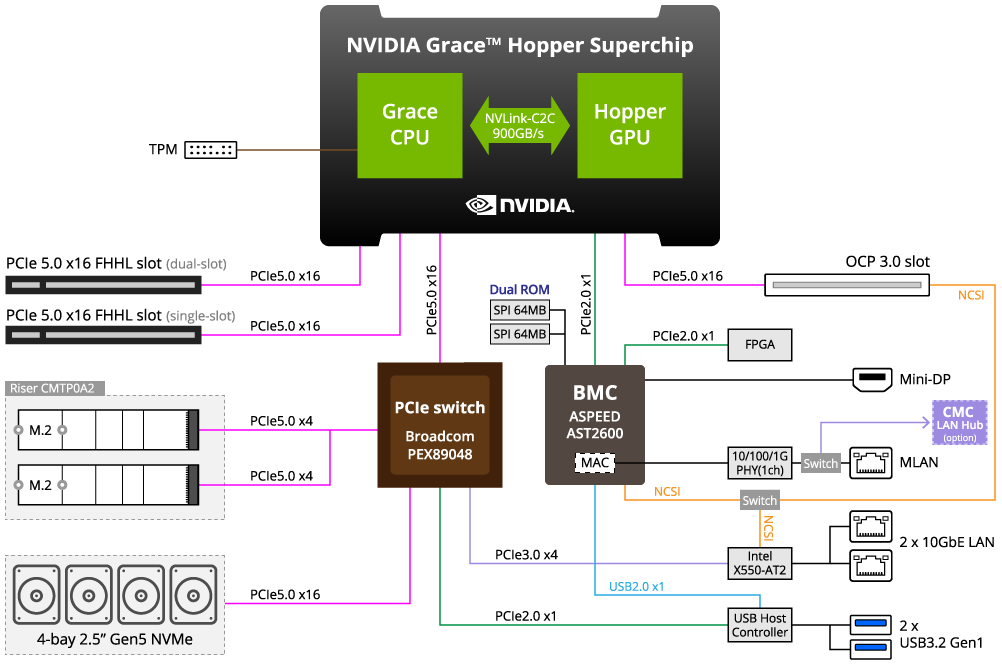

Moving beyond pure CPU applications, the NVIDIA GH200 Grace Hopper Superchip combines an NVIDIA Grace CPU and an NVIDIA H100 GPU for giant-scale AI and HPC applications. The two are joined by NVIDIA NVLink-Chip-to-Chip (NVLink-C2C) technology, bringing both compute engines onto a single superchip and forming the most powerful computational module. Its coherent memory design combines high-speed HBM3 or HBM3e GPU memory with large-capacity LPDDR5X CPU memory. The superchip can also scale out over InfiniBand networking using BlueField®-3 DPUs or NICs, connecting systems at up to 100GB/s for ML and HPC workloads. The upcoming GH200 NVL32 further accelerates deep learning and HPC workloads by connecting up to 32 superchips through the NVLink Switch System, which is built on NVLink switches and provides 900GB/s of bandwidth between any two superchips, making the most of the computing power and the extended GPU memory.

Armv9.0-A architecture for better compatibility and easier execution of other Arm-based binaries.

Balance of bandwidth, energy efficiency, capacity, and cost, with the first data center implementation of LPDDR technology.

Adoption of high-bandwidth memory (HBM) for improved performance on memory-intensive workloads.

Multiple PCIe Gen5 links for flexible add-in card configurations and system communication.

Industry-leading chip-to-chip interconnect technology with up to 900GB/s, alleviating bottlenecks and making a coherent memory interconnect possible.

Designed for maximum scale-out capability, with up to 100GB/s of total bandwidth across all superchips through InfiniBand switches, BlueField-3 DPUs, and ConnectX-7 NICs.

Bringing preferred programming languages to the CUDA platform along with hardware-accelerated memory coherency for simple adaptation to the new platform.
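To put the 900GB/s NVLink-C2C figure in context next to the PCIe Gen5 links listed above, the following back-of-the-envelope sketch computes the raw bandwidth of one PCIe Gen5 x16 link (32 GT/s per lane, 128b/130b encoding, both directions, protocol overhead ignored). It assumes the 900GB/s figure is total bidirectional bandwidth; the resulting ratio is an illustration, not a measured result.

```python
# Rough comparison of NVLink-C2C (900 GB/s, quoted above) with PCIe Gen5 x16.
PCIE_GEN5_TRANSFERS_PER_LANE = 32e9  # 32 GT/s per lane (PCIe 5.0)
ENCODING_EFFICIENCY = 128 / 130      # 128b/130b line encoding
NVLINK_C2C_GB_S = 900.0              # assumed to be total bidirectional bandwidth


def pcie_gen5_bandwidth_gb_s(lanes: int = 16) -> float:
    """Raw bidirectional PCIe Gen5 bandwidth in GB/s, ignoring protocol overhead."""
    per_direction = PCIE_GEN5_TRANSFERS_PER_LANE * lanes * ENCODING_EFFICIENCY / 8 / 1e9
    return 2 * per_direction


pcie = pcie_gen5_bandwidth_gb_s()
ratio = NVLINK_C2C_GB_S / pcie
print(f"PCIe Gen5 x16: {pcie:.1f} GB/s bidirectional")
print(f"NVLink-C2C: roughly {ratio:.1f}x that figure")
```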

GIGABYTE servers feature Automatic Fan Speed Control to optimize cooling and power efficiency. Individual fan speeds are adjusted automatically based on readings from temperature sensors strategically placed throughout the server.
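Automatic fan control of this kind is commonly implemented as a fan curve: each temperature reading is mapped to a fan duty cycle by interpolating between set points, and each fan zone follows its hottest sensor. The sketch below shows that general technique; the curve points and the hottest-sensor policy are illustrative assumptions, not GIGABYTE's firmware behavior.

```python
from bisect import bisect_right

# Hypothetical fan curve: (temperature in °C, fan duty cycle in %).
# Illustrative values only, not taken from GIGABYTE firmware.
FAN_CURVE = [(25.0, 20.0), (40.0, 35.0), (60.0, 70.0), (75.0, 100.0)]


def fan_duty(temp_c: float, curve=FAN_CURVE) -> float:
    """Linearly interpolate a duty cycle for one reading, clamped to the curve ends."""
    temps = [t for t, _ in curve]
    if temp_c <= temps[0]:
        return curve[0][1]
    if temp_c >= temps[-1]:
        return curve[-1][1]
    # bisect_right finds i such that curve[i - 1] <= temp_c < curve[i] by temperature.
    i = bisect_right(temps, temp_c)
    (t0, d0), (t1, d1) = curve[i - 1], curve[i]
    return d0 + (d1 - d0) * (temp_c - t0) / (t1 - t0)


def zone_duty(sensor_temps: list[float]) -> float:
    """Drive a fan zone from the hottest sensor assigned to it (assumed policy)."""
    return fan_duty(max(sensor_temps))
```

For example, `zone_duty([38.0, 50.0, 42.0])` uses the 50°C reading and interpolates between the 40°C and 60°C set points.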

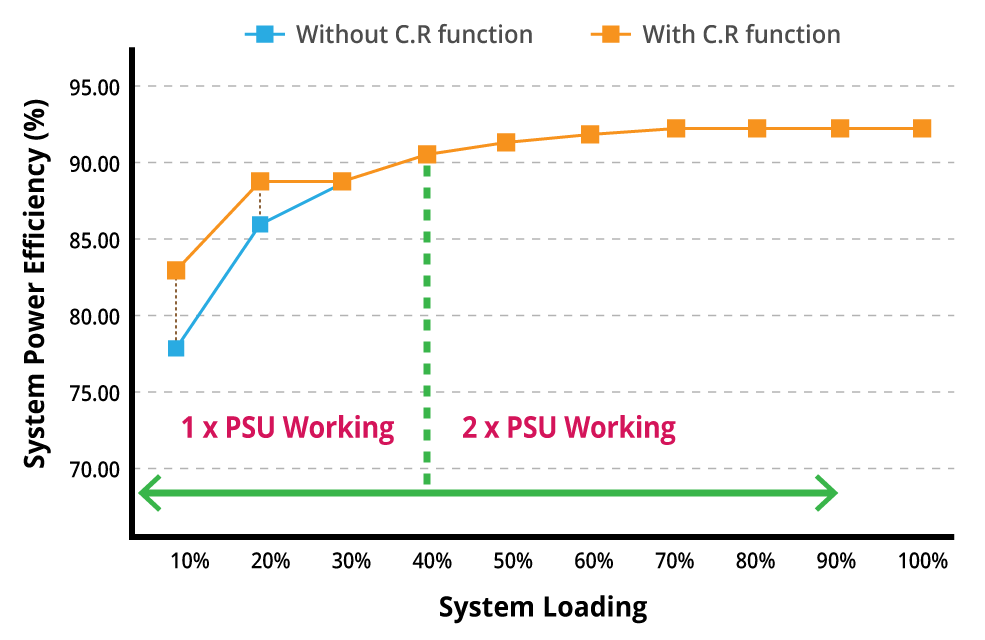

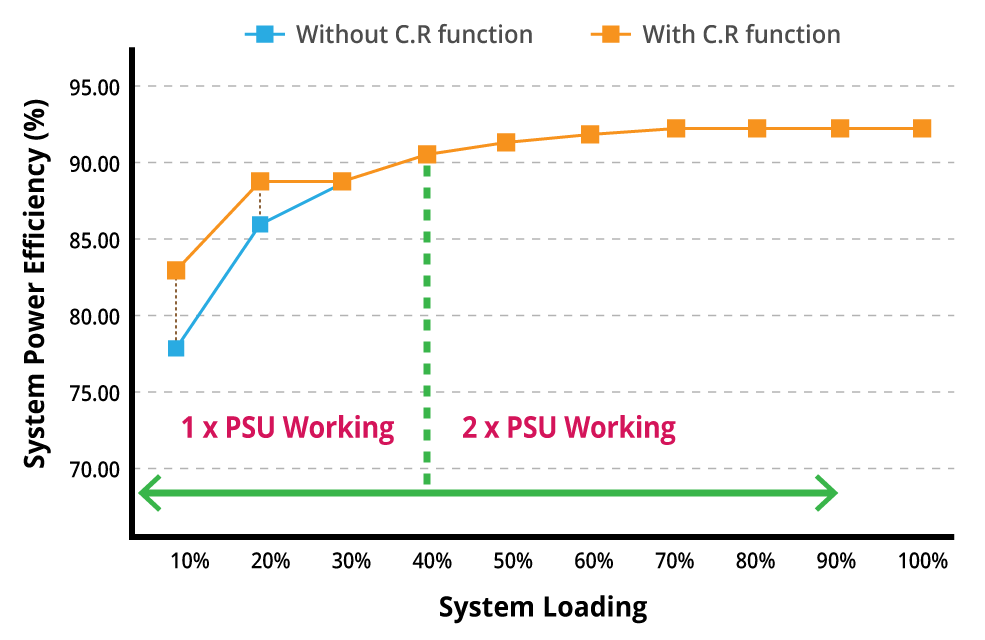

Because a PSU runs more efficiently at higher load, GIGABYTE has introduced a power management feature called Cold Redundancy for servers with N+1 power supplies. When the total system load falls below 40%, the system automatically places one PSU into standby mode, resulting in a 10% improvement in efficiency.
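The Cold Redundancy rule can be expressed as a small decision function. This is a minimal sketch, assuming the 40% threshold is measured against the combined rating of all installed PSUs (the text does not define the base); the function names are hypothetical.

```python
# Threshold from the text: one PSU goes to standby below 40% total system load.
COLD_REDUNDANCY_THRESHOLD = 0.40


def active_psu_count(load_fraction: float, installed_psus: int) -> int:
    """Number of PSUs left active for a given load.

    load_fraction is assumed to be system load divided by the combined
    rating of all installed PSUs (an assumption, not stated in the text).
    """
    if installed_psus < 2:
        return installed_psus  # no spare unit to park
    if load_fraction < COLD_REDUNDANCY_THRESHOLD:
        return installed_psus - 1  # park one PSU in standby
    return installed_psus


def per_psu_load(load_fraction: float, installed_psus: int) -> float:
    """Load carried by each active PSU, as a fraction of one PSU's rating."""
    active = active_psu_count(load_fraction, installed_psus)
    return load_fraction * installed_psus / active
```

With three PSUs at 30% load, one unit is parked and each of the two remaining PSUs carries about 45% of its rating, a higher and typically more efficient operating point.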

To prevent server downtime and data loss caused by an AC power failure, GIGABYTE implements SmaRT in all our server platforms. When such an event occurs, the system throttles to reduce its power load while remaining available. Capacitors within the power supply can sustain power for 10-20ms, which is enough time to transition to a backup power source for continued operation.
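The 10-20ms hold-up window is effectively an energy budget: the capacitors store a fixed amount of energy, and the time it lasts is that energy divided by the power drawn (E = P × t). The sketch below, with hypothetical load figures and conversion losses ignored, shows why throttling helps: halving the load doubles the ride-through time.

```python
def holdup_energy_j(load_w: float, holdup_ms: float) -> float:
    """Energy needed to carry load_w through holdup_ms: E = P x t (losses ignored)."""
    return load_w * holdup_ms / 1000.0


def holdup_time_ms(stored_energy_j: float, load_w: float) -> float:
    """How long a fixed stored energy lasts at a given load: t = E / P."""
    return stored_energy_j * 1000.0 / load_w


# Hypothetical numbers: size the budget for 10 ms at 3000 W, then throttle to half load.
budget_j = holdup_energy_j(3000.0, 10.0)
full_load_ms = holdup_time_ms(budget_j, 3000.0)
throttled_ms = holdup_time_ms(budget_j, 1500.0)
print(f"Budget {budget_j:.0f} J: {full_load_ms:.0f} ms at 3000 W, {throttled_ms:.0f} ms at 1500 W")
```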

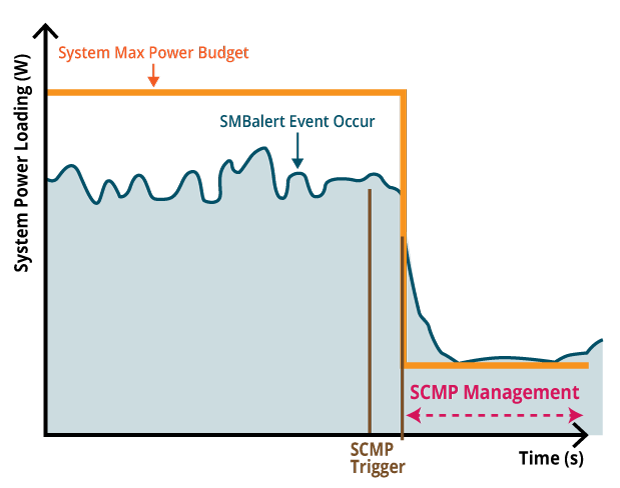

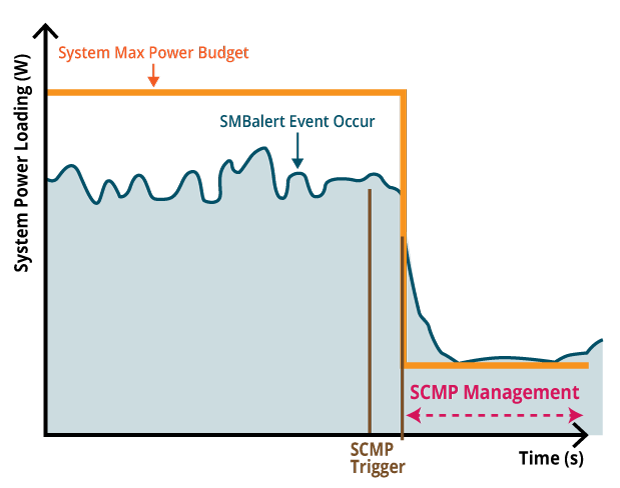

SCMP is a patented GIGABYTE feature deployed in servers whose PSUs are not fully redundant. With SCMP, if a PSU fails or the system overheats, the system forces the CPU into an ultra-low power mode that reduces the power load, preventing an unexpected shutdown and avoiding component damage or data loss.
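The SCMP behavior described above amounts to a simple policy: any PSU fault or over-temperature condition switches the CPU to its lowest power state. The sketch below models that policy only; the temperature threshold, the `SystemStatus` fields, and the mode names are hypothetical, since the actual trip points are not given here.

```python
from dataclasses import dataclass
from enum import Enum


class CpuPowerMode(Enum):
    NORMAL = "normal"
    ULTRA_LOW = "ultra_low"  # stands in for the forced ultra-low power state


# Hypothetical trip point; the real SCMP threshold is not stated in the text.
OVERHEAT_LIMIT_C = 45.0


@dataclass
class SystemStatus:
    psu_ok: list[bool]    # one health flag per installed PSU
    system_temp_c: float  # hypothetical aggregate temperature reading


def scmp_decide(status: SystemStatus) -> CpuPowerMode:
    """Force ultra-low CPU power on any PSU fault or overheat; otherwise run normally."""
    psu_fault = not all(status.psu_ok)
    overheated = status.system_temp_c >= OVERHEAT_LIMIT_C
    if psu_fault or overheated:
        return CpuPowerMode.ULTRA_LOW
    return CpuPowerMode.NORMAL
```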

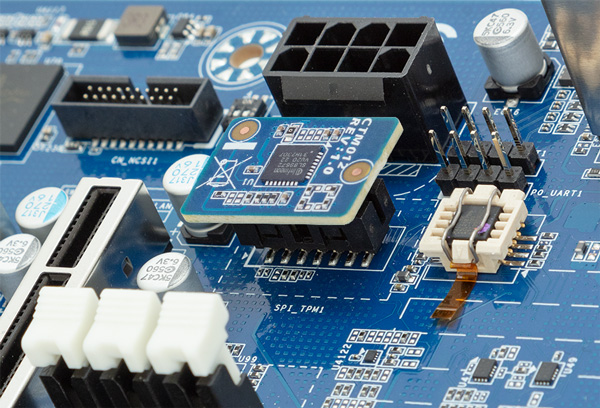

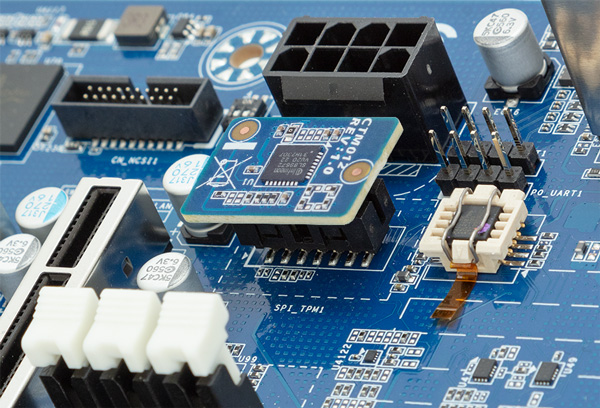

For hardware-based authentication, passwords, encryption keys, and digital certificates are stored in a TPM (Trusted Platform Module) to keep unauthorized users from gaining access to your data. GIGABYTE TPM modules are available with either a Serial Peripheral Interface (SPI) or Low Pin Count (LPC) bus.

A clip mechanism secures the drive in place, so a drive can be installed or replaced in seconds.

GIGABYTE offers servers with an onboard OCP 3.0 slot for the next generation of add-on cards. Advantages of this new form factor include:

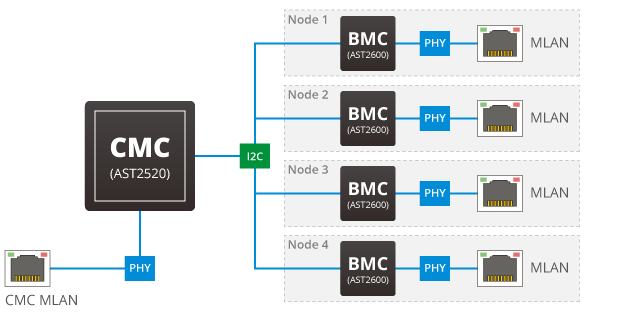

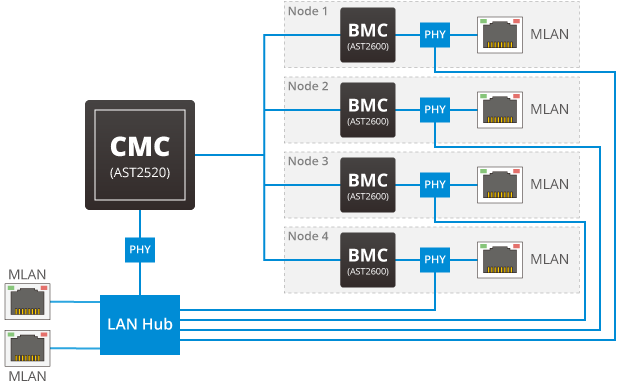

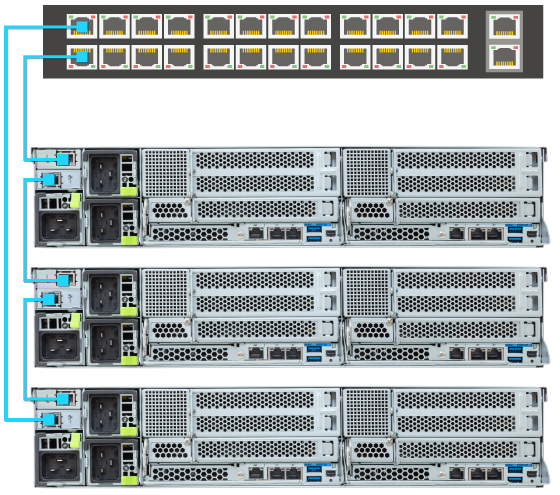

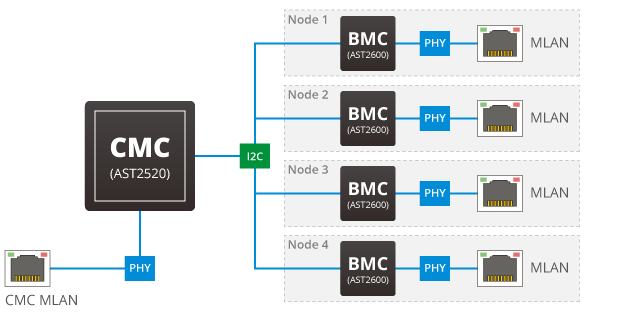

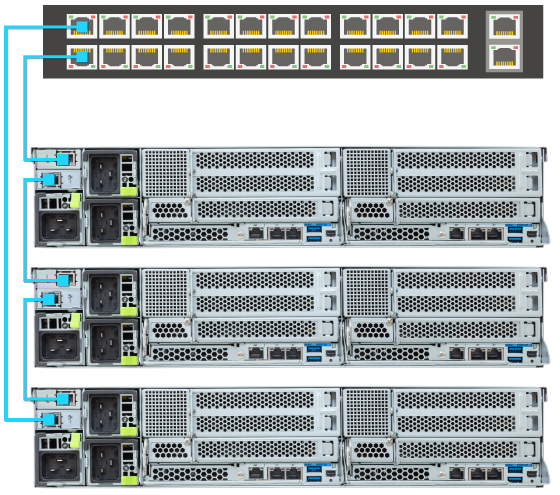

GIGABYTE H-Series servers feature a CMC (Chassis Management Controller)* for chassis-level management and multi-node monitoring: the BMC integrated on each node connects internally to the CMC. This simplifies management, as fewer cables and network switch connections are needed.

* IPMI only, no iKVM functionality

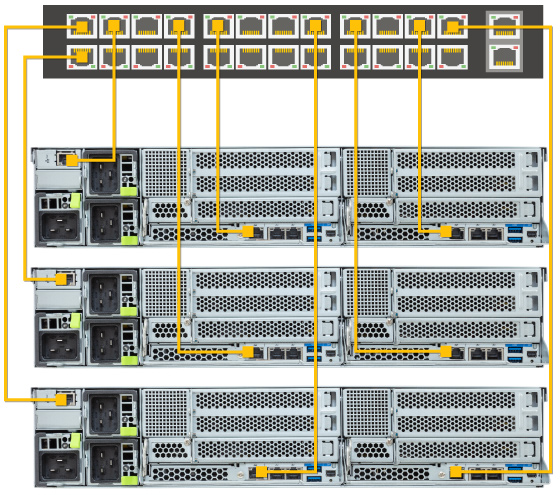

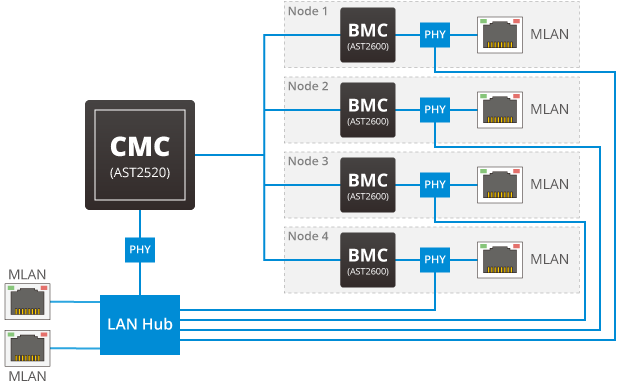

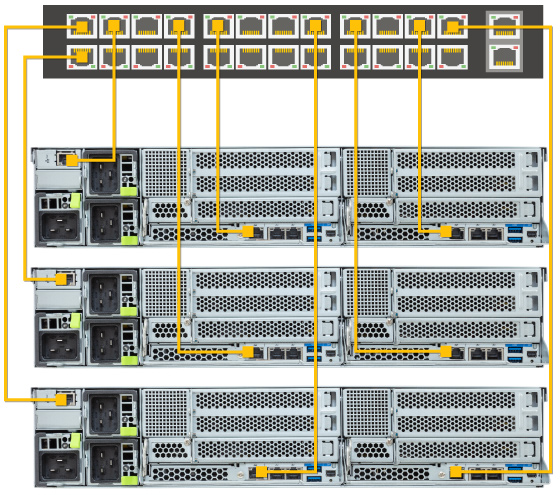

GIGABYTE H-Series servers feature the ability to create a “ring” connection for monitoring & management of all servers in a rack for greater cost savings and management efficiency.

Typical Network Topology

Using CMC & Ring Topology Kit

GIGABYTE offers free-of-charge management applications through a dedicated service processor (BMC) built into the server.

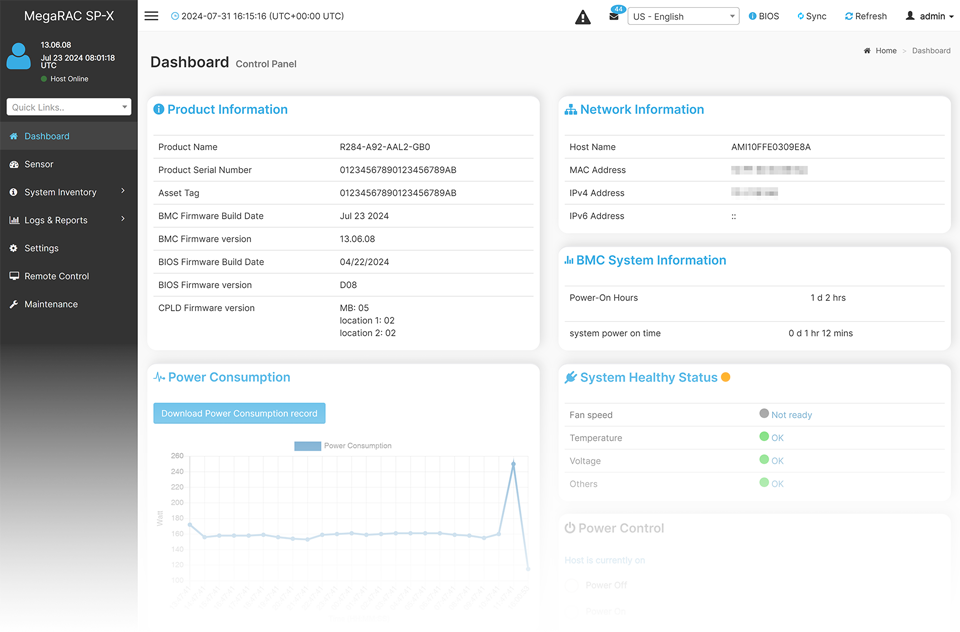

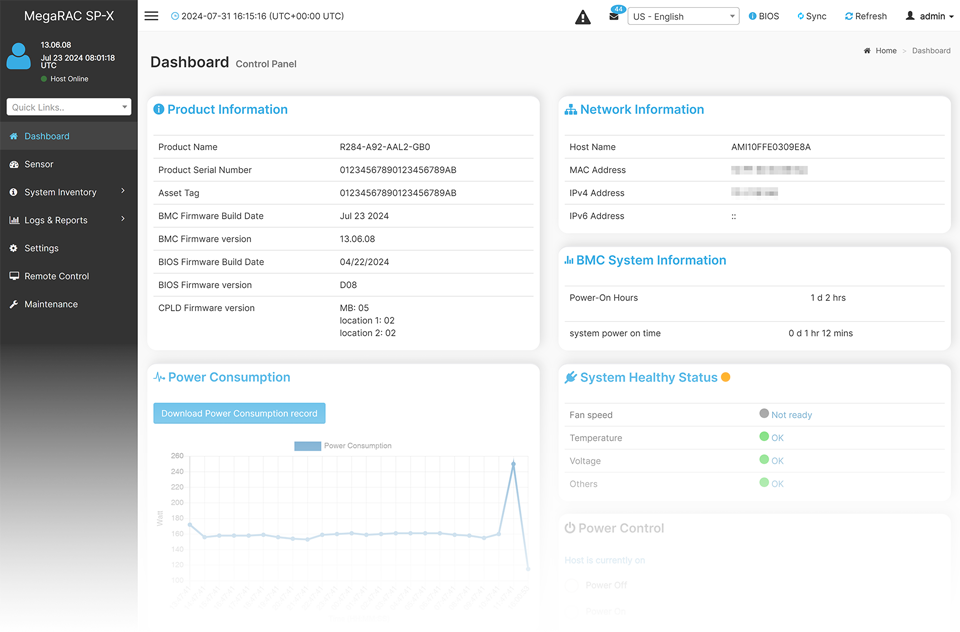

For managing and maintaining a single server or a small cluster, users can use the GIGABYTE Management Console, which is pre-installed on each server. Once the servers are running, IT staff can perform real-time health monitoring and management of each server through a browser-based graphical user interface. The GIGABYTE Management Console also provides:

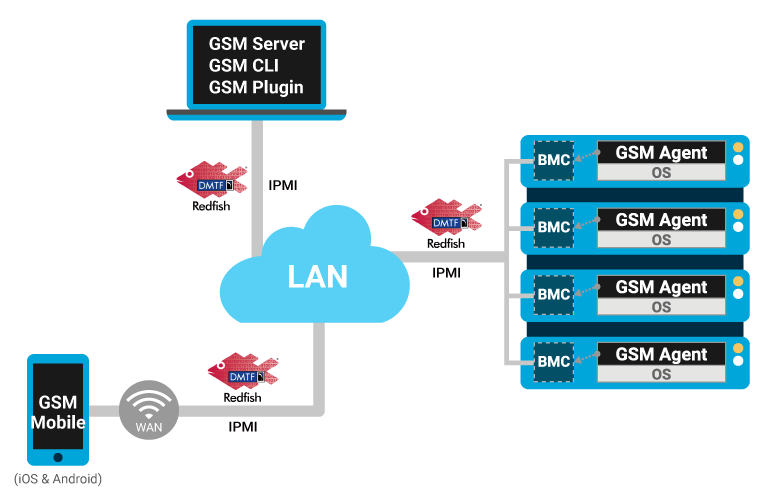

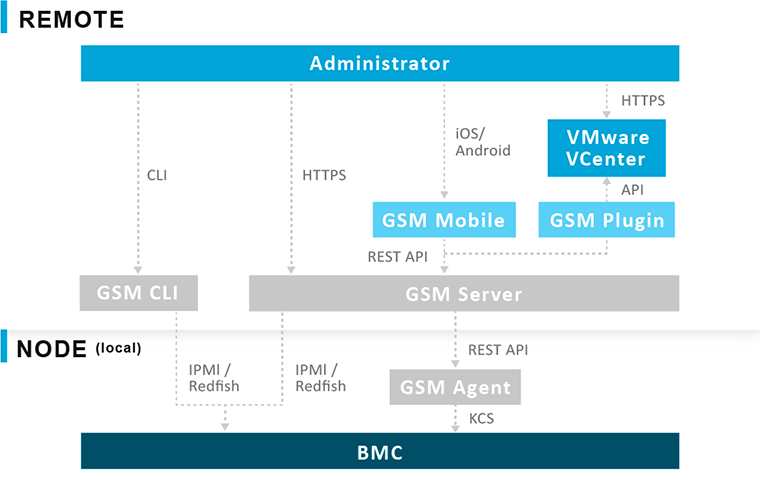

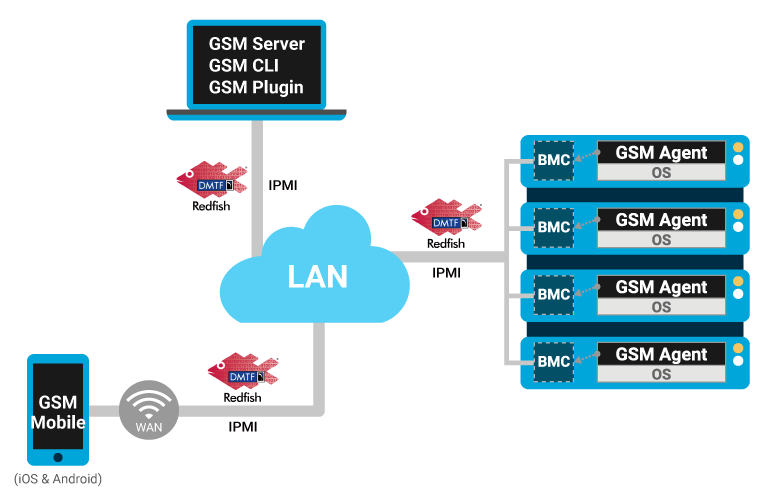

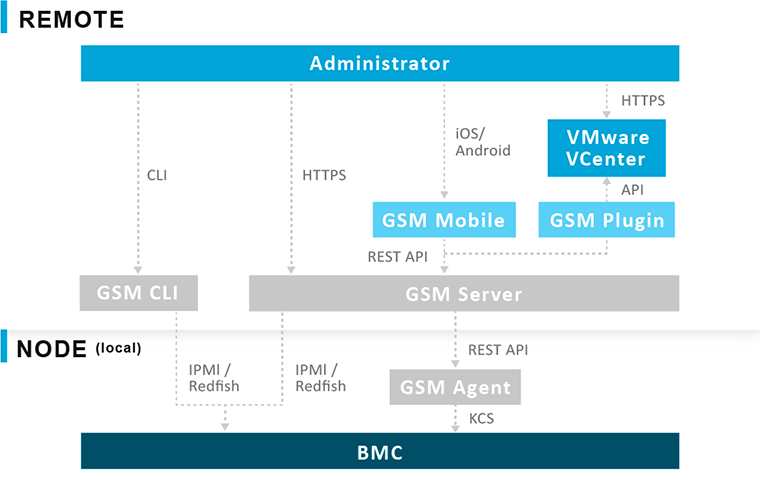

GSM is a software suite that can manage clusters of servers simultaneously over the internet. GSM can be run on all GIGABYTE servers, supports Windows and Linux, and can be downloaded from the GIGABYTE website. It complies with the IPMI and Redfish standards and provides a complete range of system management functions through the following utilities:

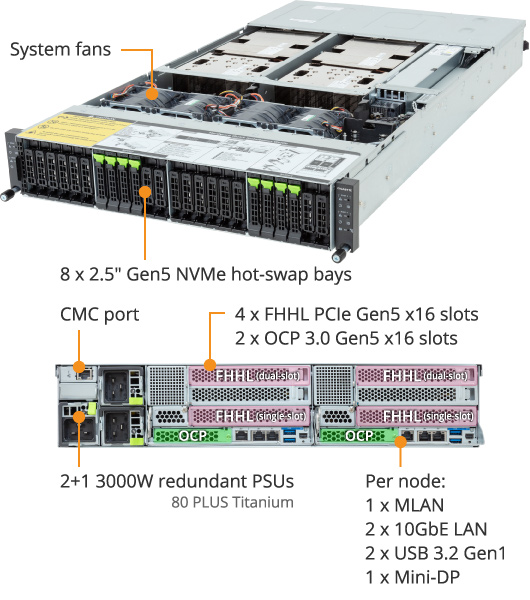

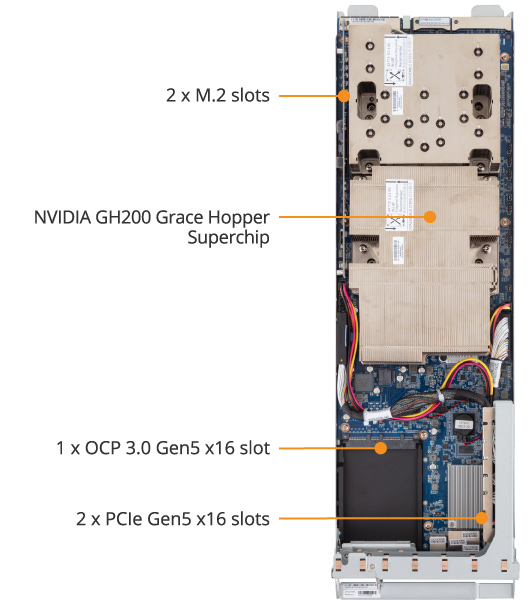

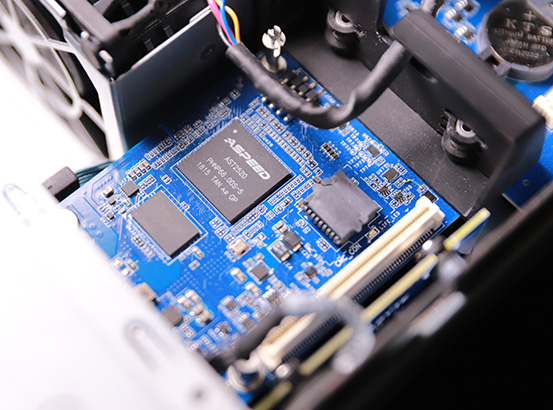

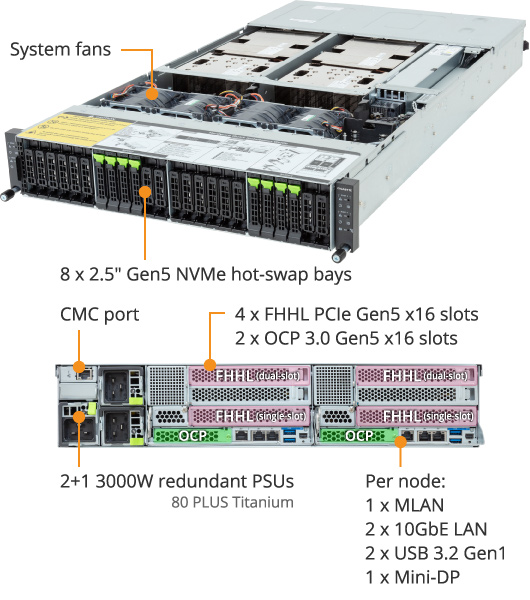

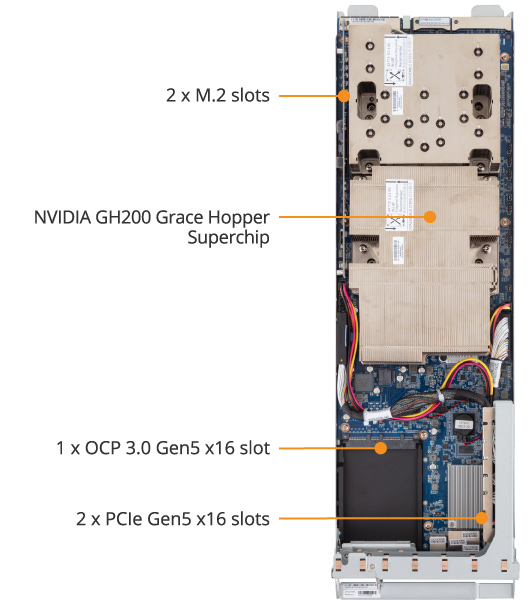

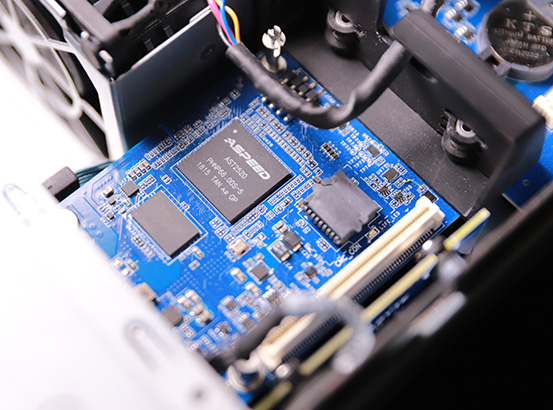
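Because GSM is described as Redfish-compliant, the managed servers' inventory can also be read with any standard Redfish client. The sketch below is not GSM itself; it is a minimal standard-library client that assumes the node's BMC exposes the standard DMTF Redfish service at `/redfish/v1` with HTTP Basic authentication. The host address and credentials in the usage comment are hypothetical.

```python
import base64
import json
import ssl
import urllib.request

# Standard DMTF Redfish path for the ComputerSystem collection.
SYSTEMS_PATH = "/redfish/v1/Systems"


def member_uris(collection: dict) -> list[str]:
    """Return the @odata.id links listed in a Redfish collection document."""
    return [member["@odata.id"] for member in collection.get("Members", [])]


def get_redfish_json(host: str, path: str, user: str, password: str,
                     verify_tls: bool = True, timeout_s: float = 10.0) -> dict:
    """GET one Redfish resource from a BMC using HTTP Basic authentication."""
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    request = urllib.request.Request(
        f"https://{host}{path}",
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
    )
    context = ssl.create_default_context()
    if not verify_tls:
        # BMCs often ship self-signed certificates; only disable checks on trusted networks.
        context.check_hostname = False
        context.verify_mode = ssl.CERT_NONE
    with urllib.request.urlopen(request, timeout=timeout_s, context=context) as response:
        return json.load(response)


# Usage (hypothetical BMC address and credentials):
#   systems = get_redfish_json("192.0.2.10", SYSTEMS_PATH, "admin", "password", verify_tls=False)
#   for uri in member_uris(systems):
#       print(uri)
```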

| Specification | Details |
|---|---|
| Dimensions (WxHxD, mm) | 2U 2-Node - Rear access<br>440 x 87.5 x 850 |
| Motherboard | MV13-HD0 |
| Superchip | NVIDIA Grace Hopper Superchip:<br>- 1 x Grace CPU<br>- 1 x Hopper H100 GPU<br>- Connected with NVLink-C2C<br>- TDP up to 1000W (CPU + GPU + memory) |
| Memory | Per node:<br>Grace CPU:<br>- Up to 480GB of LPDDR5X memory with ECC<br>- Memory bandwidth up to 512GB/s<br>Hopper H100 GPU:<br>- Up to 96GB HBM3 or 144GB HBM3e (coming soon)<br>- Memory bandwidth up to 4TB/s (or 4.9TB/s) |
| LAN | Per node:<br>2 x 10GbE LAN ports (1 x Intel® X550-AT2), Support NCSI function<br>1 x Dedicated management port<br>Total:<br>4 x 10GbE LAN ports (2 x Intel® X550-AT2), Support NCSI function<br>2 x Dedicated management ports<br>1 x CMC port |
| Video | Integrated in Aspeed® AST2600<br>2D Video Graphic Adapter with PCIe bus interface<br>1920x1200@60Hz 32bpp<br>Management chip on CMC board: Integrated in Aspeed® AST2520A2-GP |
| Storage | Per node:<br>4 x 2.5" Gen5 NVMe hot-swappable bays, from PEX89048<br>Total:<br>8 x 2.5" Gen5 NVMe hot-swappable bays, from CPU |
| SAS | N/A |
| RAID | N/A |
| Expansion Slots | Per node:<br>PCIe cable x 2:<br>- *1 x PCIe x16 (Gen5 x16) FHHL slot (Dual slot), from CPU<br>- 1 x PCIe x16 (Gen5 x16) FHHL slot (Single slot), from CPU<br>1 x OCP 3.0 slot with PCIe Gen5 x16 bandwidth, from CPU, Supports NCSI function<br>2 x M.2 slots:<br>- M-key<br>- PCIe Gen5 x4, from PEX89048<br>- Support 2280/22110 cards<br>Total:<br>PCIe cable x 4:<br>- *2 x PCIe x16 (Gen5 x16) FHHL slots (Dual slot), from CPU<br>- 2 x PCIe x16 (Gen5 x16) FHHL slots (Single slot), from CPU<br>2 x OCP 3.0 slots with PCIe Gen5 x16 bandwidth, from CPU, Support NCSI function<br>4 x M.2 slots:<br>- M-key<br>- PCIe Gen5 x4, from PEX89048<br>- Support 2280/22110 cards<br>*When BlueField-3 DPUs are installed, the ambient temperature is limited to 25℃. |
| Internal I/O | Per node:<br>1 x TPM header |
| Front I/O | Per node:<br>1 x Power button with LED<br>1 x ID button with LED<br>1 x Status LED<br>1 x System reset button<br>Total:<br>2 x Power buttons with LED<br>2 x ID buttons with LED<br>2 x Status LEDs<br>2 x System reset buttons<br>*1 x CMC status LED<br>*1 x CMC reset button<br>*Only one CMC status LED and reset button per system. |
| Rear I/O | Per node:<br>2 x USB 3.2 Gen1<br>1 x Mini-DP<br>2 x RJ45<br>1 x MLAN<br>1 x ID LED<br>Total:<br>4 x USB 3.2 Gen1<br>2 x Mini-DP<br>4 x RJ45<br>2 x MLAN<br>2 x ID LEDs<br>*1 x CMC port<br>*Only one CMC port per system. |
| Backplane Board | Speed and bandwidth: PCIe Gen5 x4 |
| TPM | 1 x TPM header with SPI interface<br>Optional TPM2.0 kit: CTM012 |
| Power Supply | 2+1 3000W 80 PLUS Titanium redundant power supplies<br>AC Input:<br>- 100-127V~/ 16A, 50/60Hz<br>- 200-207V~/ 16A, 50/60Hz<br>- 208-240V~/ 16A, 50/60Hz<br>DC Input (Only for China):<br>- 240Vdc/ 16A<br>DC Output:<br>- Max 1200W/ 100-127V~: +12.2V/ 98.36A, +12.2Vsb/ 3A<br>- Max 2600W/ 200-207V~: +12.2V/ 213A, +12.2Vsb/ 3A<br>- Max 3000W/ 208-240V~ or 240Vdc Input: +12.2V/ 245.9A, +12.2Vsb/ 3A<br>Note: The system power supply requires C19 power cord. |
| System Management | Aspeed® AST2600 management controller<br>GIGABYTE Management Console (AMI MegaRAC SP-X) web interface:<br>- Dashboard<br>- HTML5 KVM<br>- Sensor Monitor (Voltage, RPM, Temperature, CPU Status, etc.)<br>- Sensor Reading History Data<br>- FRU Information<br>- SEL Log in Linear Storage / Circular Storage Policy<br>- Hardware Inventory<br>- Fan Profile<br>- System Firewall<br>- Power Consumption<br>- Power Control<br>- Advanced power capping<br>- LDAP / AD / RADIUS Support<br>- Backup & Restore Configuration<br>- Remote BIOS/BMC/CPLD Update<br>- Event Log Filter<br>- User Management<br>- Media Redirection Settings<br>- PAM Order Settings<br>- SSL Settings<br>- SMTP Settings |
| OS Compatibility | Please refer to OS compatibility table in support page |
| System Fans | 4 x 80x80x80mm (16,500rpm) |
| Operating Properties | Operating temperature: 10°C to 35°C<br>Operating humidity: 8-80% (non-condensing)<br>Non-operating temperature: -40°C to 60°C<br>Non-operating humidity: 20%-95% (non-condensing) |
| Packaging Dimensions | 1180 x 706 x 306 mm |
| Packaging Content | 1 x H223-V10-AAW1<br>4 x Superchip heatsinks<br>1 x Mini-DP to D-Sub cable<br>1 x 3-Section Rail kit |
| Part Numbers | - Barebone w/ Nvidia module: 6NH223V10MR000AAW1*<br>- Motherboard: 9MV13HD0NR-000<br>- 3-Section Rail kit: 25HB2-A66125-K0R<br>- Superchip heatsink: 25ST1-2532Z3-A0R<br>- Superchip heatsink: 25ST1-2532Z5-A0R<br>- Front panel board - CFPH004: 9CFPH004NR-00<br>- Backplane board - CBPH7O1: 9CBPH7O1NR-00<br>- Fan module: 25ST2-888020-S1R<br>- M.2 riser card - CMTP0A2: 9CMTP0A2NR-00<br>- LAN board - CLBH010: 9CLBH010NR-00<br>- Mini-DP to D-Sub cable: 25CRN-200801-K1R<br>- Power supply: 25EP0-23000L-L0S<br>Optional parts:<br>- RMA packaging: 6NH223V10SR-RMA-A100 |
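The DC output ratings in the Power Supply specification are internally consistent: each maximum wattage matches the +12.2V main rail's voltage times its current (the 3A standby rail is left out). The sketch below checks that arithmetic and also computes usable capacity under 2+1 redundancy, assuming the usual meaning of N+1 (one PSU held in reserve), so two PSUs carry the load. The helper names are hypothetical.

```python
# (max output W, main rail V, main rail A), copied from the Power Supply specification.
DC_OUTPUT = {
    "100-127V~": (1200, 12.2, 98.36),
    "200-207V~": (2600, 12.2, 213.0),
    "208-240V~ or 240Vdc": (3000, 12.2, 245.9),
}


def rail_power_w(volts: float, amps: float) -> float:
    """Power delivered by one DC rail: P = V x I."""
    return volts * amps


def usable_capacity_w(per_psu_w: float, installed: int = 3, redundant: int = 1) -> float:
    """Capacity left for the load when `redundant` PSUs are held in reserve."""
    return per_psu_w * (installed - redundant)


for input_range, (rated_w, volts, amps) in DC_OUTPUT.items():
    computed = rail_power_w(volts, amps)
    print(f"{input_range}: {volts} V x {amps} A = {computed:.1f} W (rated {rated_w} W), "
          f"2+1 usable: {usable_capacity_w(rated_w):.0f} W")
```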


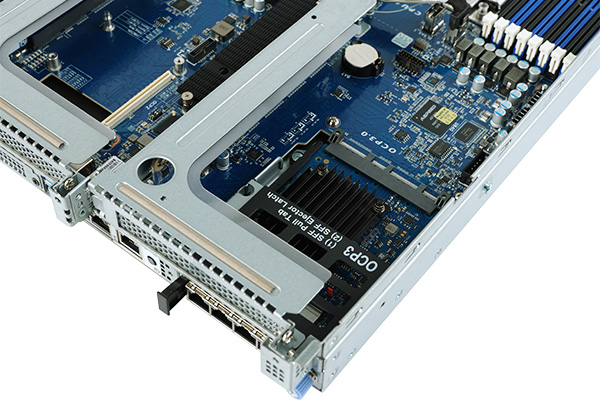

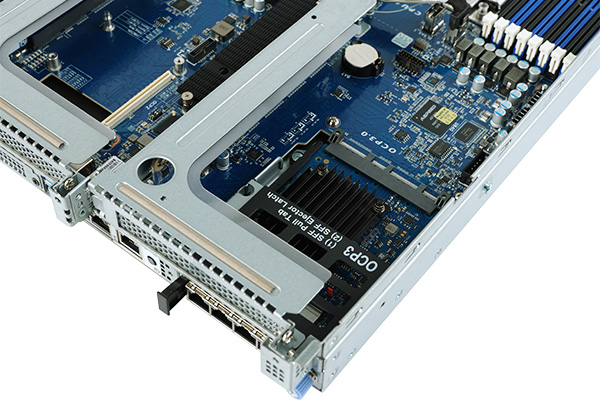